Matematica si Informatica in limba

Engleza

Traffic shaping. QoS application

Chapter 1

1.1 Introduction

The

past three centuries have been dominated by the progress of technology. The

18th century was the era of the great mechanical systems and the Industrial

Revolution. The 19th century was the age of the steam engine. During the 20th

century, the technology was gathering information, processing, and distribution

of it. Among other developments, we saw the birth and unprecedented growth of

the computer industry.

The history of computer networking is complex, involving

many people from all over the world over the past thirty years. In the 1940s,

computers were huge electromechanical devices that were prone to failure. In

1947, the invention of a semiconductor transistor opened up many possibilities

for making smaller, more reliable computers. In the 1950s, mainframe computers,

run by punched card programs, began to be commonly used by large institutions.

In the late 1950s, the integrated circuit - that combined several, many, and

now millions, of transistors on one small piece of semiconductor - was

invented. Through the 1960s, mainframes with terminals were common place, and

integrated circuits became more widely used.

In the

late 60s and 70s, smaller computers, called minicomputers (though still huge by

today's standards), came into existence. In 1978, the Apple Computer company

introduced the personal computer. In 1981, IBM introduced the open-architecture

personal computer. The user friendly Mac, the open architecture IBM PC, and the

further micro-miniaturization of integrated circuits lead to widespread use of

personal computers in homes and businesses. As the late 1980s began, computer

users - with their stand-alone computers - started to share data (files) and

resources (printers). People asked, why not connect them?

Starting

in the 1960s and continuing through the 70s, 80s, and 90s, the Department of

Defense (DoD) developed large, reliable, wide area networks (WANS). Some of

their technology was used in the development of LANs, but more importantly, the

DoDs WAN eventually became the Internet.

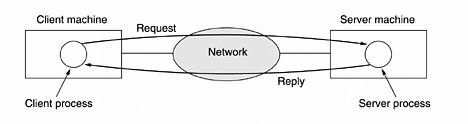

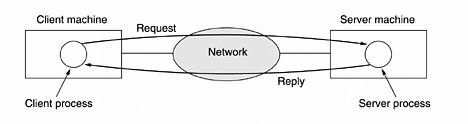

Somewhere

in the world, there were two computers that wanted to communicate with each

other. In order to do so, they both needed some kind of device that could talk

to the computers and the media (the Network Interface Card - NIC), and

some way for the messages to travel (medium).

Suppose, also, that the

computers wanted to communicate with other computers that were a great distance

away. The answer to this problem came in the form of repeaters and hubs. The

repeater (an old device used by telephone networks) was introduced to enable

computer data signals to travel farther. The multi-port repeater, or hub,

was introduced to enable a group of users to share files, servers and

peripherals. You might call this a workgroup

network.

Soon, work groups

wanted to communicate with other work groups. Because of the functions of hubs

(they broadcast all messages to all ports, regardless of destination), as the

number of hosts and the number of workgroups grew, there were larger and larger

traffic jams. The bridge was

invented to segment the network, to introduce some traffic control.

The

best feature of the hub - concentration /connectivity - and the best feature of

the bridge - segmentation - were combined to produce a switch. It had lots of ports, but allowed each port to pretend it

had a connection to the other side of the bridge, thus allowing many users and

lots of communications.

In the

mid-1980s, special-purpose computers, called gateways (and then routers)

were developed. These devices allowed the interconnection of separate LANs. Internetworks were created. The DoD

already had an extensive internetwork, but the commercial availability of

routers - which carried out best path

selections and switching for data

from many protocols - caused the explosive growth of networks .

As the chart shows,

the Web has experienced three growth stages:

- 1991-1997: Explosive growth, at a rate of 850%

per year.

- 1998-2001: Rapid growth, at a rate of 150%

per year.

- 2002-2006: Maturing growth, at a rate of 25%

per year.

The

number of Internet websites each year since the Web's

founding.

Today

many companies have a substantial number of computers . For example an ISP can

have multiple computers in different locations. The main computer can be

situated in one region and the others in the neighbourhood. Making the data

available to anyone on the network without regard to the physical location of

the resource and the user is called resource sharing. The data is stored on

powerfull computer called server. The

employees have simpler machines, called clients, with which they access

remote data.

Broadly

speaking, there are two types of transmission technology that are in widespread

use. They are as follows:

Broadcast links.

Point-to-point links.

Broadcast

networks have a single communication channel

that is shared by all the machines on the network. Short messages, called packets

in certain contexts, sent by any machine are received by all the others. An

address field within the packet specifies the intended recipient. Upon

receiving a packet, a machine checks the address field. If the packet is intended

for the receiving machine, that machine processes the packet; if the packet is

intended for some other machine, it is just ignored.

Broadcast

systems generally also allow the possibility of addressing a packet to all destinations by using a special code in the

address field. When a packet with this code is transmitted, it is received and

processed by every machine on the network in the same time. This mode of

operation is called broadcasting. Some broadcast systems also support

transmission to a subset of the machines, and this is known as multicasting.

When a packet is sent to a certain group, it is delivered to all machines

subscribing to that group.

Point-to-point networks consist of many connections between individual pairs of

machines. To go from the source to the destination, a packet on this type of

network may have to first visit one or more intermediate machines. Often

multiple routes, of different lengths, are possible, so finding good ones is

important in point-to-point networks. Point-to-point transmission with one

sender and one receiver is called unicasting.

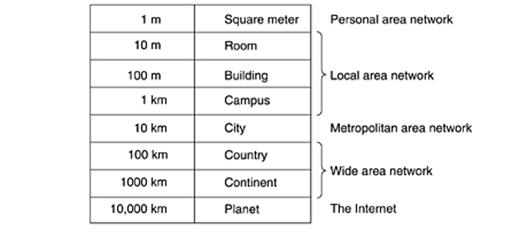

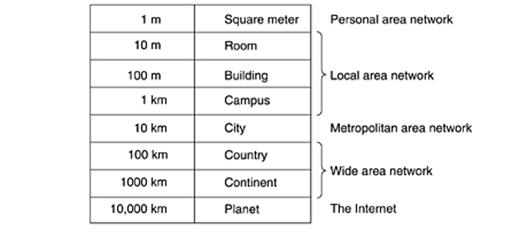

1.2 Classification of networks

A

criteria for classifying networks is their scale. At the top are the personal area

networks, networks that are meant for one person. For example, a wireless

network connecting a computer with its mouse, keyboard, and printer is a

personal area network. Beyond the personal area networks come longer-range

networks. These can be divided into local, metropolitan, and wide area

networks. Finally, the connection of two or more networks is called an

internetwork. The worldwide Internet is a well-known example of an internetwork

1.2.1 Local Area Networks

Local

area networks, generally called LANs,

are networks within a single building or campus of up to a few kilometers in

size. They are widely used to connect personal computers and workstations in

offices or at home to share resources (e.g., printers) and exchange

information. LANs are distinguished from other kinds of networks by three

characteristics:

(1) their size,

(2) their transmission

technology ,

(3) their topology.

LANs may

use a transmission technology consisting of a cable to which all the machines

are attached. Traditional LANs run at speeds of 10 Mbps to 100 Mbps, have low

delay (microseconds or nanoseconds), and make very few errors.

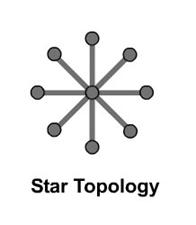

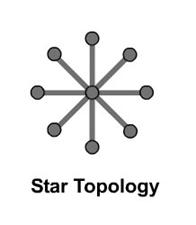

LANs may

have various topologies . By

topology we undestant the structure of the network. The

topolgy can be devided in two parts, the physical topology, which is the actual

layout of the wire (media), and the logical topology, which defines how the

media is accessed by the hosts. The physical topologies that are commonly used

are the Bus, Ring and Star.

In a star topology, each network device has

a lot of cable which connects to the central node , giving each device a

separate connection to the network. If there is a problem with a cable, it will

generally not affect the rest of the network. The most common cable media in

use for star topologies is unshielded twisted pair copper cabling. Category 3

is still found frequently in older installations. It is capable of 10 megabits

per second data transfer rate, making it suitable for only 10 BASE T Ethernet. Most new installations use Category 5 cabling.

It is capable of data transfer rates of 100 megabits per second, enabling it to

employ 100 BASE T Ethernet, also

known as Fast Ethernet. More

importantly, the brand new 1000 BASE T Ethernet standard will be able to run

over most existing Category 5. Finally, fiber optic cable can be used to

transmit either 10 BASE T or 100 BASE T Ethernet frames.

When using Category 3 or 5 twisted pair cabling,

individual cables cannot exceed 100 meters.

Advantages

- More suited for larger networks

- Easy to expand network

- Easy to troubleshoot because problem usually

isolates itself

- Cabling types can be mixed

Disadvantages

- Hubs become a

single point of network failure, not the cabling

- Cabling more

expensive due to home run needed for every device

Best used in small area

of network. In the bus topology the

cable runs from computer to computer making each computer a link of a chain.

Different types of cable determine

way of network can be connected.

Advantages of a Linear Bus Topology

- Easy to connect a computer or peripheral to a

linear bus.

- Requires less cable length than a star topology.

Disadvantages of a Linear Bus Topology

- Entire network shuts down if there is a break in

the main cable.

- Terminators are required at both ends of the

backbone cable.

- Difficult to identify the problem if the entire

network shuts down.

- Not meant to be used as a stand-alone solution in

a large building.

Ring topologies are used on token ring networks. Each device processes

and retransmits the signal, so it is capable of supporting many devices in a

somewhat slow but very orderly fashion. A token, or small data packet, is

continuously passed around the network. When a device needs to transmit, it

reserves the token for the next trip around, then attaches its data packet to

it. The receiving device sends back the packet with an acknowledgment of

receipt, then the sending device puts the token back out on the network. Most

token ring networks have the physical cabling of a star topology and the

logical function of a ring through use of multi

access units (MAU). In a ring topology, the network signal is passed

through each network card of each device and passed on to the next device. All

devices have a cable home runned back to the MAU. The MAU makes a logical ring

connection between the devices internally. When each device signs on or off, it

sends an electrical signal which trips mechanical switches inside the MAU to

either connect the device to the ring or drop it off the ring. The most common

type of cabling used for token ring networks is twisted pair. Transmission

rates are at either 4 or 16 megabits per second.

Advantages

- Very orderly network where every device has access

to the token and the opportunity to transmit

- Performs better than a star topology under heavy

network load

- Can create much larger network using Token Ring

Disadvantages

- One malfunctioning workstation or bad port in the

MAU can create problems for the entire network

- Moves, adds and changes of devices can affect the

network

- Network adapter cards and MAU's are much more

expensive than Ethernet cards and hubs

- Much slower than

an Ethernet network under normal load

1.2.2 Metropolitan Area Networks

Metropolitan Area Networks called MAN are optimized

for a larger geographical area than is a LAN, ranging from several blocks of

buildings to entire cities. As with local networks, MANs can also depend on

communications channels of moderate-to-high data rates. They typically use

wireless infrastructure or optical fiber connections for linking.

A

MAN might be owned and operated by a single organization, but it usually will

be used by many individuals and organizations. MANs might also be owned and

operated as public utilities. They will often provide means for internetworking

of local networks.

1.2.3 Wide Area Networks

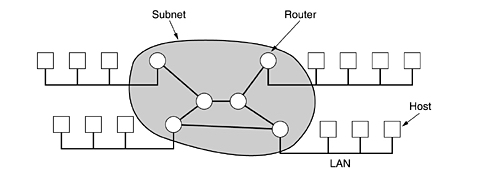

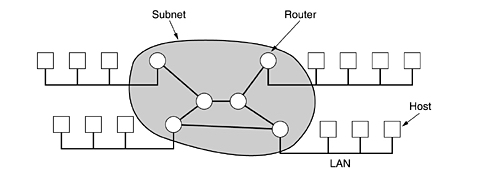

A wide area network, or WAN, spans a large geographical area, often a

country or continent. It contains a collection of machines intended for running

user (i.e., application) programs. We will follow traditional usage and call

these machines hosts.

The hosts are connected by a communication

subnet, or just subnet for

short. The job of the subnet is to carry messages from host to host.

In this model each host is frequently connected to a LAN

on which a router is present, although in some cases a host can be connected

directly to a router. The collection of communication lines and routers (but

not the hosts) form the subnet.

Relation between hosts on LANs

and the subnet.

In most

WANs, the network contains numerous transmission lines, each one connecting a

pair of routers. If two routers that do not share a transmission line wish to

communicate, they must do this indirectly, via other routers. When a packet is

sent from one router to another via one or more intermediate routers, the

packet is received at each intermediate router in its entirety, stored there until

the required output line is free, and then forwarded. A subnet organized

according to this principle is called a store-and-forward or packet-switched

subnet. Nearly all wide area networks (except those using satellites) have

store-and-forward subnets. When the packets are small and all the same size,

they are often called cells.

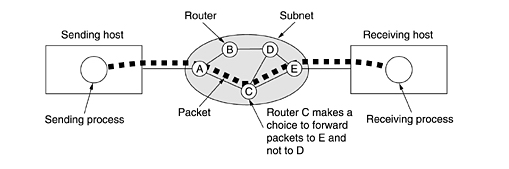

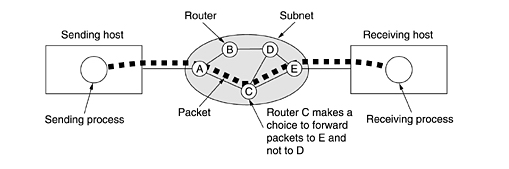

The

principle of a packet-switched WAN is so important that it is worth devoting a

few more words to it. Generally, when a process on some host has a message to

be sent to a process on some other host, the sending host first cuts the

message into packets, each one bearing its number in the sequence. These

packets are then injected into the network one at a time in quick succession.

The packets are transported individually over the network and deposited at the

receiving host, where they are reassembled into the original message and

delivered to the receiving process. A stream of packets resulting from some

initial message is illustrated below:

In the figure above, all the packets follow the route ACE, rather

than ABDE

or ACDE.

In some networks all packets from a given message must

follow the same route; in others each packet is routed separately. Of course,

if ACE

is the best route, all packets may be sent along it, even if each packet is

individually routed.

Routing decisions are made locally. When a packet arrives at router A,itis up to A to

decide if this packet should be sent on the line to B

or the line to C. How A

makes that decision is called the routing algorithm.

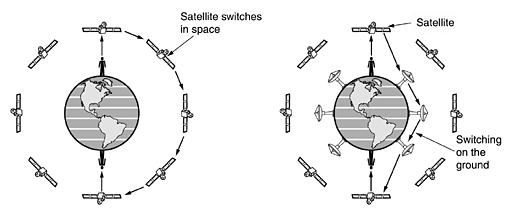

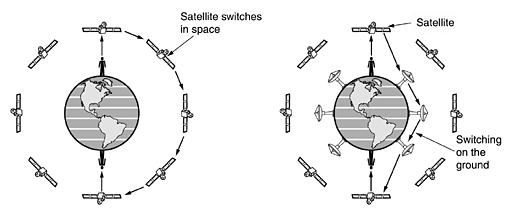

Not all WANs are packet switched. A second possibility for a WAN is a

satellite system. Each router has an antenna through which it can send and

receive. All routers can hear the output from

the satellite, and in some cases they can also hear the upward transmissions of

their fellow routers to the satellite as well.

Sometimes the routers are connected to a substantial point-to-point subnet,

with only some of them having a satellite antenna.

1.2.4. Wireless networks

Digital wireless communication is not a new idea. As early as 1901, the

Italian physicist Guglielmo Marconi demonstrated a ship-to-shore wireless

telegraph, using Morse Code (dots and dashes are binary, after all). Modern

digital wireless systems have better performance, but the basic idea is the

same.

Wireless networks can be divided into three main categories:

System interconnection.

Wireless LANs. (WLAN)

Wireless WANs. (WWAN)

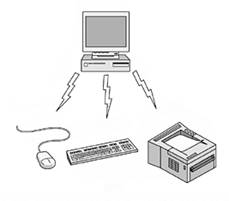

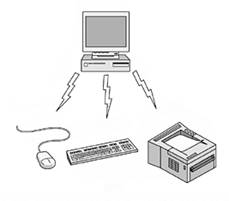

System interconnection is all about interconnecting the components of a compute using

short-range radio. Almost every computer has a monitor, keyboard, mouse, and

printer connected to the main unit by cables. Some companies got together to

design a short-range wireless network called Bluetooth

to connect these components without wires. Bluetooth

also allows digital cameras, headsets, scanners, and other devices to connect

to a computer by merely being brought within range. No cables, no driver

installation, just put them down, turn them on, and they work. Many people,

prefer this facility.

In the simplest form, system interconnection networks use the

master-slave paradigm (see the figure below). The system unit is normally the

master, talking to the mouse, keyboard, etc., as slaves. The master tells the

slaves what addresses to use, when they can broadcast, how long they can transmit,

what frequencies they can use, and so on.

Bluetooth/Wireless

connection

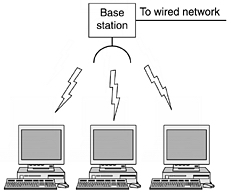

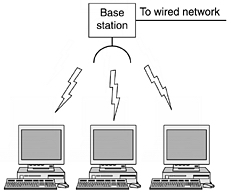

Wireless LANs are systems in which every computer has a radio modem and antenna with

which it can communicate with other systems. Often there is an antenna on the

ceiling that the machines talk to. If the systems are close enough, they can

communicate directly with one another in a peer-to-peer configuration.

Wireless LANs are becoming increasingly common in small offices and

homes, where we don't want to install

Ethernet and get rid of the cables.

Wirless LAN

Wide Area Systems . The radio

network used for cellular telephones is an example of a low-bandwidth wireless

system. Cellular wireless networks are like wireless LANs, except that the

distances involved are much greater and the bit rates much lower. Wireless LANs

can operate at rates up to about 50 Mbps over distances of tens of meters.

Cellular systems operate below 1 Mbps, but the distance between the base

station and the computer or telephone is measured in kilometers rather than in

meters.

Example of wide area systems

Home Networks

Home networking is on the horizon. The fundamental idea

is that in the future most homes will be set up for networking. Every device in

the home will be capable of communicating with every other device, and all of

them will be accessible over the Internet. This is one of those visionary

concepts that nobody asked for (like TV remote controls or mobile phones), but

once they arrived nobody can imagine how they lived without them.

Many devices are capable of being networked. Some of the

more obvious categories (with examples) are as follows:

Computers (desktop PC, notebook PC, PDA, shared peripherals).

Entertainment (TV, DVD, VCR, camcorder, camera, stereo, MP3).

Telecommunications (telephone, mobile telephone, intercom, fax).

Appliances (microwave, refrigerator, clock, aircooler, lights).

Home computer networking is already here in a limited way. Many homes

already have a device to connect multiple computers to a fast Internet

connection. Networked entertainment is not quite here, but as more and more

music and movies can be downloaded from the Internet, there will be a demand to

connect stereos and televisions to it. Also, people will want to share their

own videos with friends and family, so the connection will need to go both

ways. Telecommunications gear is already connected to the outside world, but

soon it will be digital and go over the Internet. Finally, remote monitoring of

the home and its contents is a likely winner. Probably many parents would be

willing to spend some money to monitor their sleeping babies on their PDAs when

they are eating out.

Home networking has some fundamentally different properties than other

network types. First, the network and devices have to be easy to install. The

author has installed numerous pieces of hardware and software on various

computers over the years, with mixed results. A series of phone calls to the

vendor's helpdesk typically resulted in answers like (1) Read the manual, (2)

Reboot the computer, (3) Remove all hardware and software except ours and try

again, (4) Download the newest driver from our Web site, and if all else fails,

(5) Reformat the hard disk and then reinstall Windows from the CD-ROM. Telling

the purchaser of an Internet refrigerator to download and install a new version

of the refrigerator's operating system is not going to lead to happy customers.

1.2.6 Internetworks

Many networks exist in the world, often with different hardware and software.

People connected to one network often want to communicate with people attached

to a different one. The fulfillment of this desire requires that different, and

frequently incompatible networks, be connected, sometimes by means of machines

called gateways to make the connection and

provide the necessary translation, both in terms of hardware and software. A

collection of interconnected networks is called an internetwork or internet. A

common form of internet is a collection of LANs connected by a WAN.

An internetwork is formed when distinct networks are interconnected.

1.3 OSI Reference model

To reduce their design complexity, most networks are organized as a

stack of layers.

Layer n on one machine carries on a

conversation with layer n on another machine. The rules and conventions used in this

conversation are collectively known as the layer n

protocol. Basically, a protocol is an agreement between the communicating

parties on how communication is to proceed.

A set of layers and protocols is called

a network architecture.

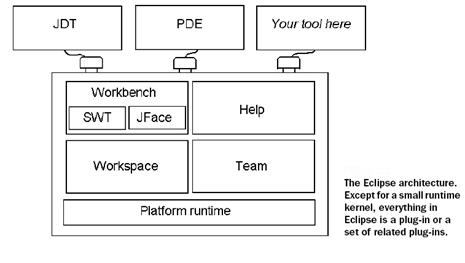

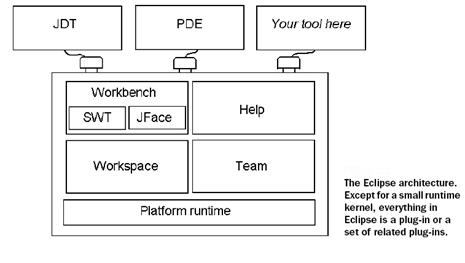

For this we will explain the OSI

reference model which is based on on a proposal developed by the

International Standards Organization (ISO) . The model is called the ISO OSI (Open Systems Interconnection) Reference Model

because it deals with connecting open systems-that is, systems that are open

for communication with other systems . The OSI model has seven layers.

The OSI Reference Model

Physical Layer

The physical layer, the lowest

layer of the OSI model, is concerned with the transmission and reception of the

unstructured raw bit stream over a physical medium. It describes the

electrical/optical, mechanical, and functional interfaces to the physical

medium, and carries the signals for all of the higher layers. It provides:

Data encoding: modifies the simple digital signal

pattern (1s and 0s) used by the PC to better accommodate the characteristics of

the physical medium, and to aid in bit and frame synchronization. It determines:

What

signal state represents a binary 1

How

the receiving station knows when a "bit-time" starts

How

the receiving station delimits a frame

Physical medium attachment, accommodating various

possibilities in the medium:

Transmission technique: determines whether the

encoded bits will be transmitted by baseband (digital) or broadband (analog)

signaling.

Physical medium transmission: transmits bits as

electrical or optical signals appropriate for the physical medium, and

determines:

What

physical medium options can be used

How

many volts/db should be used to represent a given signal state, using a given

physical medium

Data Link Layer

The

data link layer provides error-free transfer of data frames from one node to

another over the physical layer, allowing layers above it to assume virtually

error-free transmission over the link. To do this, the data link layer

provides:

Link

establishment and termination: establishes and terminates the logical link

between two nodes.

Frame

traffic control: tells the transmitting node to "back-off" when no frame

buffers are available.

Frame

sequencing: transmits/receives frames sequentially.

Frame

acknowledgment: provides/expects frame acknowledgments.

Detects and recovers from errors that occur in the

physical layer by retransmitting non-acknowledged frames and handling duplicate

frame receipt.

Frame

delimiting: creates and recognizes frame boundaries.

Frame

error checking: checks received frames for integrity.

Media

access management: determines when the node "has the right" to use the physical

medium.

Network Layer

The

network layer controls the operation of the subnet, deciding which physical

path the data should take based on network conditions, priority of service, and

other factors. It provides:

Routing:

routes frames among networks.

Subnet

traffic control: routers (network layer intermediate systems) can instruct a

sending station to "throttle back" its frame transmission when the router's

buffer fills up.

Frame

fragmentation: if it determines that a downstream router's maximum transmission

unit (MTU) size is less than the frame size, a router can fragment a frame for

transmission and re-assembly at the destination station.

Logical-physical

address mapping: translates logical addresses, or names, into physical addresses.

Subnet

usage accounting: has accounting functions to keep track of frames forwarded by

subnet intermediate systems, to produce billing information.

Transport Layer

The

transport layer ensures that messages are delivered error-free, in sequence, and

with no losses or duplications. It relieves the higher layer protocols from any

concern with the transfer of data between them and their peers.

The size and complexity of a transport protocol depends on the type of

service it can get from the network layer. For a reliable network layer with

virtual circuit capability, a minimal transport layer is required. If the

network layer is unreliable and/or only supports datagrams, the transport

protocol should include extensive error detection and recovery.

The transport

layer provides:

Message

segmentation: accepts a message from the (session) layer above it, splits the

message into smaller units (if not already small enough), and passes the

smaller units down to the network layer. The transport layer at the destination

station reassembles the message.

Message acknowledgment: provides reliable

end-to-end message delivery with acknowledgments.

Message traffic control: tells the transmitting

station to "back-off" when no message buffers are available.

Session multiplexing: multiplexes several message

streams, or sessions onto one logical link and keeps track of which messages

belong to which sessions (see session layer).

Typically, the transport layer can accept relatively large messages, but

there are strict message size limits imposed by the network (or lower) layer.

Consequently, the transport layer must break up the messages into smaller

units, or frames, pretending a header to each frame.

The transport

layer header information must then include control information, such as message

start and message end flags, to enable the transport layer on the other end to

recognize message boundaries. In

addition, if the lower layers do not maintain sequence, the transport header

must contain sequence information to enable the transport layer on the

receiving end to get the pieces back together in the right order before handing

the received message up to the layer above.

Session Layer

The

session layer allows session establishment between processes running on

different stations. It provides:

Session

establishment, maintenance and termination: allows two application processes on

different machines to establish, use and terminate a connection, called a

session.

Session

support: performs the functions that allow these processes to communicate over

the network, performing security, name recognition, logging, and so on.

Presentation Layer

The

presentation layer formats the data to be presented to the application layer.

It can be viewed as the translator for the network. This layer may translate data from a format

used by the application layer into a common format at the sending station, and

then translate the common format to a format known to the application layer at

the receiving station.

The presentation layer

provides:

Character

code translation: for example, ASCII to EBCDIC. (Extended Binary

Coded Decimal Interchange Code

Data

conversion: bit order, integer-floating point.

Data

compression: reduces the number of bits that need to be transmitted on the

network.

Data

encryption: encrypt data for security purposes. For example, password

encryption.

Application Layer

The

application layer serves as the window for users and application processes to

access network services. This layer contains a variety of commonly needed functions:

Resource

sharing and device redirection

Remote

file access

Remote

printer access

Inter-process

communication

Network

management

Directory

services

Electronic

messaging (such as mail)

Network

virtual terminals

By creating specifications on multiple layers, the OSI model has a

lot of benefits:

Reduced complexity allows faster evolution. There are companies

specialized in creating products specific for one layer, instead of rebuilding

everything from the application to the physical layer.

Interoperability is much easier due to standardization.

Each layer uses the service of the layer immediately below it, and

so it is easier to remember what the lower layer does.

It simplifies teaching. For example, network administrators need

to know the functions of the lowest four layers, while programmers need to know

the upper layers.

Units and metrics

To avoid any confusion metric units are used instead of traditional

units. The principal metric prefixes are listed in the figure below. The

prefixes are typically abbreviated by their first letters, with the units

greater than 1 capitalized (KB, MB, etc.). One exception (for historical

reasons) is kbps for kilobits/sec. Thus, a 1-Mbps communication line transmits

106 bits/sec and a 100 psec (or 100 ps) clock ticks every 10-10

seconds. Since milli and micro both begin with the letter ''m,'' a choice had

to be made. Normally, ''m'' is for milli and ''µ'' (the Greek letter mu) is for

micro.

|

Exp

|

Explicit

|

Prefix

|

Exp

|

Explicit

|

Prefix

|

|

|

mili

|

|

|

Kilo

|

|

|

micro

|

|

|

Mega

|

|

|

nano

|

|

|

Giga

|

|

|

pico

|

|

|

Tera

|

|

|

femto

|

|

|

Peta

|

|

|

atto

|

|

|

Exa

|

|

|

zepto

|

|

|

Zetta

|

|

|

yocto

|

|

|

Yotta

|

Here

kilo means 210 (1024) rather than 103 (1000) because memories are always a power of two .

Thus:

1-KB memory contains 210(1024) bytes, not

1000 bytes.

1-MB memory contains 220 (1,048,576) bytes

1-GB memory contains 230 (1,073,741,824) bytes

1-TB database contains 240 (1,099,511,627,776)

bytes

However,

a 1-kbps communication line transmits

1000 bits per second and a 10-Mbps LAN runs at 10,000,000 bits/sec because these speeds are not powers of two.

1.5 Introduction to traffic shaping

and routing

The traditional service model of the internet

is called best effort, which means that the network will do

the best it can to send packets to the receiver as quickly as possible, but

there are no guarantees. In a non-QoS-enabled IP network

all packets generally receive the same best-effort service. Quality of Service (QoS) is the

technique used to treat different packets differently.

As computer network grew , the needs of new

multimedia services such as video conferencing and streaming audio arose. If you have ever whitnessed your interactive Internet applications

experiencing network delays, it becomes clear that best effort is often not

good enough. Some flows need preferential treatment. Fortunately, the

possibility exists to handle different flows of packets differently; to

recognize that some traffic requires low latency or a rate guarantee for the

best user experience this is why sometimes is called traffic control.

Let's

say that in the case we have two computers, or two users sharing the same

uplink to the Internet, one is using BitTorrent for downloading files and another

tries to read his e-mail. The first one may be able to fill up the output queue

on the router than the data can be transmitted across the link, at which point

the router starts to drop packets , and the second one can be faced with packet

loss and high latency. By creating traffic rules and separating the internal

queues used to service these two different classes of application, there can be

better sharing of the network resource between the two applications.

Traffic control is the set of tools which

allows the user to have granular control over these queues and the queuing

mechanisms of a networked device. The power to rearrange traffic flows and

packets with these tools is tremendous and can be complicated, but is no

substitute for adequate bandwidth.

Packet-switched

networks differ from circuit based networks in one very important regard. A

packet-switched network itself is stateless. A circuit-based network (such as a

telephone network) must hold state within the network. IP networks are stateless

and packet-switched networks by design; in fact, this statelessness is one of

the fundamental strengths of IP.

The

weakness of this statelessness is the lack of differentiation between types of

flows. In simplest terms, traffic control allows an administrator to queue

packets differently based on attributes of the packet. It can even be used to

simulate the behaviour of a circuit-based network. This introduces statefulness

into the stateless network.

There

are many practical reasons to consider traffic control, and many scenarios in

which using traffic control makes sense. Below are some examples of common

problems which can be solved or at least ameliorated with these tools.

The

list below is not an exhaustive list of the sorts of solutions available to

users of traffic control, but introduces the types of problems that can be

solved by using traffic control to maximize the usability of a network

connection.

Common traffic

control solutions

- Limit total bandwidth to a known rate; TBF, HTB with child

class(es).

- Limit the bandwidth of a particular

user, service or client; HTB classes and classifying with a filter. traffic.

- Maximize TCP throughput on an

asymmetric link; prioritize transmission of ACK packets, wondershaper.

- Reserve bandwidth for a particular

application or user; HTB with children

classes and classifying.

- Prefer latency sensitive traffic; PRIO inside an HTB class.

- Managed oversubscribed bandwidth; HTB with borrowing.

- Allow equitable distribution of

unreserved bandwidth; HTB with borrowing.

- Ensure that a particular type of

traffic is dropped; policer attached

to a filter with a drop action.

- Do routing based on user id, MAC

address, source IP address, port, type of service, time of day or content

- Restrict access to your computers

- Limit access of your users to other

hosts

- Protect the Internet from your

customers

- Protect your network from DoS attacks

- Remember, too that sometimes, it is

simply better to purchase more bandwidth. Traffic control does not solve

all problems!

Advantages

When

properly employed, traffic control should lead to more predictable usage of

network resources and less volatile contention for these resources. The network

then meets the goals of the traffic control configuration. Bulk download

traffic can be allocated a reasonable amount of bandwidth even as higher

priority interactive traffic is simultaneously serviced. Even low priority data

transfer such as mail can be allocated bandwidth without tremendously affecting

the other classes of traffic.

In

a larger picture, if the traffic control configuration represents policy which

has been communicated to the users, then users (and, by extension,

applications) know what to expect from the network.

Disdvantages

Complexity

is easily one of the most significant disadvantages of using traffic control.

There are ways to become familiar with traffic control tools which ease the

learning curve about traffic control and its mechanisms, but identifying a

traffic control misconfiguration can be quite a challenge.

Traffic

control when used appropriately can lead to more equitable distribution of

network resources. It can just as easily be installed in an inappropriate

manner leading to further and more divisive contention for resources.

The

computing resources required on a router to support a traffic control scenario

need to be capable of handling the increased cost of maintaining the traffic

control structures. Fortunately, this is a small incremental cost, but can

become more significant as the configuration grows in size and complexity.

For

personal use, there's no training cost associated with the use of traffic

control, but a company may find that purchasing more bandwidth is a simpler

solution than employing traffic control. Training employees and ensuring depth

of knowledge may be more costly than investing in more bandwidth.

Although

traffic control on packet-switched networks covers a larger conceptual area,

you can think of traffic control as a way to provide [some of] the statefulness

of a circuit-based network to a packet-switched network.

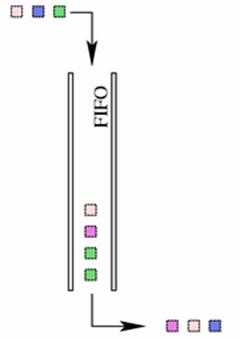

Queues

form the backdrop for all of traffic control and are the integral concept

behind scheduling. A queue is a location (or buffer) containing a finite number

of items waiting for an action or service. In networking, a queue is the place

where packets (our units) wait to be transmitted by the hardware (the service).

In the simplest model, packets are transmitted in a first-come first-serve

basis. In the discipline of computer networking (and more generally computer

science), this sort of a queue is known as a FIFO.

Without

any other mechanisms, a queue doesn't offer any promise for traffic control.

There are only two interesting actions in a queue. Anything entering a queue is

enqueued into the queue. To remove an item from a queue is to dequeue that

item.

A

queue becomes much more interesting when coupled with other mechanisms which

can delay packets, rearrange, drop and prioritize packets in multiple queues. A

queue can also use subqueues, which allow for complexity of behaviour in a

scheduling operation.

From

the perspective of the higher layer software, a packet is simply enqueued for

transmission, and the manner and order in which the enqueued packets are

transmitted is immaterial to the higher layer. So, to the higher layer, the

entire traffic control system may appear as a single queue. It is only by

examining the internals of this layer that the traffic control structures

become exposed and available.

Flows

A

flow is a distinct connection or conversation between two hosts. Any unique set

of packets between two hosts can be regarded as a flow. Under TCP the concept

of a connection with a source IP and port and destination IP and port

represents a flow. A UDP flow can be similarly defined.

Traffic

control mechanisms frequently separate traffic into classes of flows which can

be aggregated and transmitted as an aggregated flow. Alternate mechanisms may

attempt to divide bandwidth equally based on the individual flows.

Flows become important

when attempting to divide bandwidth equally among a set of competing flows,

especially when some applications deliberately build a large number of flows.

Tokens and buckets

Two

of the key underpinnings of a shaping mechanisms are the interrelated

concepts of tokens and buckets.

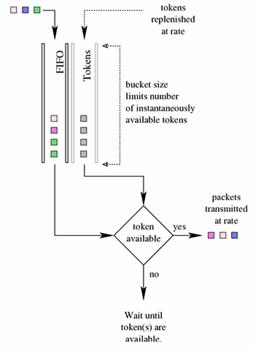

In order to control

the rate of dequeuing, an implementation can count the number of packets or

bytes dequeued as each item is dequeued, although this requires complex usage

of timers and measurements to limit accurately. Instead of calculating the

current usage and time, one method, used widely in traffic control, is to

generate tokens at a desired rate, and only dequeue packets or bytes if a token

is available.

Consider

the analogy of an amusement park ride with a queue of people waiting to

experience the ride. Let's imagine a track on which carts traverse a fixed

track. The carts arrive at the head of the queue at a fixed rate. In order to

enjoy the ride, each person must wait for an available cart. The cart is

analogous to a token and the person is analogous to a packet. Again, this

mechanism is a rate-limiting or shaping mechanism. Only a certain number of

people can experience the ride in a particular period.

To

extend the analogy, imagine an empty line for the amusement park ride and a

large number of carts sitting on the track ready to carry people. If a large

number of people entered the line together many (maybe all) of them could

experience the ride because of the carts available and waiting. The number of

carts available is a concept analogous to the bucket. A bucket contains a

number of tokens and can use all of the tokens in bucket without regard for

passage of time.

And

to complete the analogy, the carts on the amusement park ride (our tokens)

arrive at a fixed rate and are only kept available up to the size of the

bucket. So, the bucket is filled with tokens according to the rate, and if the

tokens are not used, the bucket can fill up. If tokens are used the bucket will

not fill up. Buckets are a key concept in supporting bursty traffic such as

HTTP.

The

TBF qdisc is a classical

example of a shaper .The TBF generates rate tokens and only transmits packets when a token is available. Tokens

are a generic shaping concept.

In

the case that a queue does not need tokens immediately, the tokens can be

collected until they are needed. To collect tokens indefinitely would negate

any benefit of shaping so tokens are collected until a certain number of tokens

has been reached. Now, the queue has tokens available for a large number of

packets or bytes which need to be dequeued. These intangible tokens are stored

in an intangible bucket, and the number of tokens that can be stored depends on

the size of the bucket.

This

also means that a bucket full of tokens may be available at any instant. Very

predictable regular traffic can be handled by small buckets. Larger buckets may

be required for burstier traffic, unless one of the desired goals is to reduce

the burstiness of the flows.

In summary, tokens are

generated at rate, and a maximum of a bucket's worth of tokens may be

collected. This allows bursty traffic to be handled, while smoothing and

shaping the transmitted traffic.

The

concepts of tokens and buckets are closely interrelated and are used in both TBF (one of the classless qdiscs) and HTB (one of the classful qdiscs). Within

the tc language, the use of two- and three-color meters is indubitably a

token and bucket concept.

Packets and

frames

The

terms for data sent across network changes depending on the layer the user is

examining. The word frame is typically used to describe a layer 2 (data link)

unit of data to be forwarded to the next recipient. Ethernet interfaces, PPP

interfaces, and T1 interfaces all name their layer 2 data unit a frame. The

frame is actually the unit on which traffic control is performed.

Chapter 2

2.1 Theoretical Basis for Data

Communication

Information can be

transmitted on wires by varying some physical property such as voltage or

current. By representing the value of this voltage or current as a

single-valued function of time, f(t), we can model

the behavior of the signal and analyze it mathematically.

2.1.1 Fourier Analysis

In the early 19th

century, the French mathematician Jean-Baptiste Fourier proved that any

reasonably behaved periodic function, g(t) with period T can be constructed as the sum of a (possibly

infinite) number of sines and cosines:

where f = 1/T is

the fundamental frequency, an and bn are the sine and

cosine amplitudes of the n th harmonics (terms), and c is a constant. Such a

decomposition is called a Fourier series. From the Fourier series, the function can be

reconstructed; that is, if the period, T, is known and the amplitudes

are given, the original function of time can be found by performing the sums of

this eq.

A data signal that

has a finite duration (which all of them do) can be handled by just imagining

that it repeats the entire pattern over and over forever (i.e., the interval

from T

to 2T

is the same as from 0 to T, etc.).

The an

amplitudes can be computed for any given g(t) by multiplying both sides of the

equation by sin(2πkft) and then integrating from 0 to T. Since:

only one term of the summation survives: an.

The bn

summation vanishes completely. Similarly, by multiplying the first equation by cos(2πkft)

and integrating between 0 and T, we can

derive bn.

By just integrating both sides of the equation as it stands, we can find c. The

results of performing these operations are as follows:

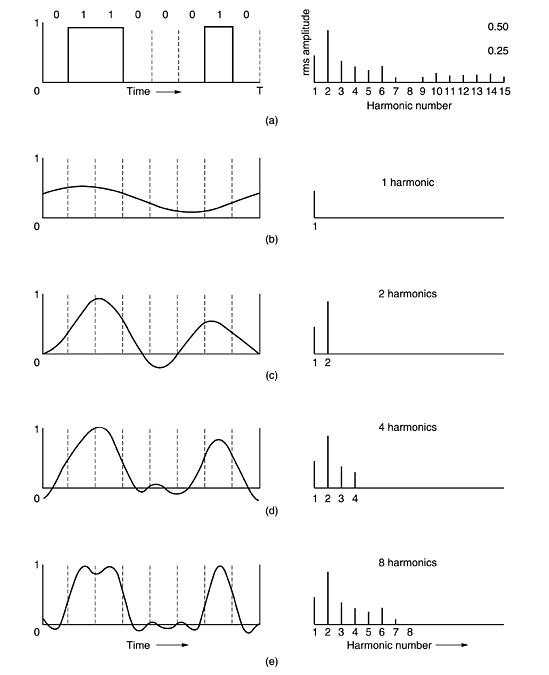

2.1.2 Bandwidth limited signals

To see what all this has to do with data communication, let us consider

a specific example: the transmission of the ASCII character ''b'' encoded in an

8-bit byte. The bit pattern that is to be transmitted is 01100010. The

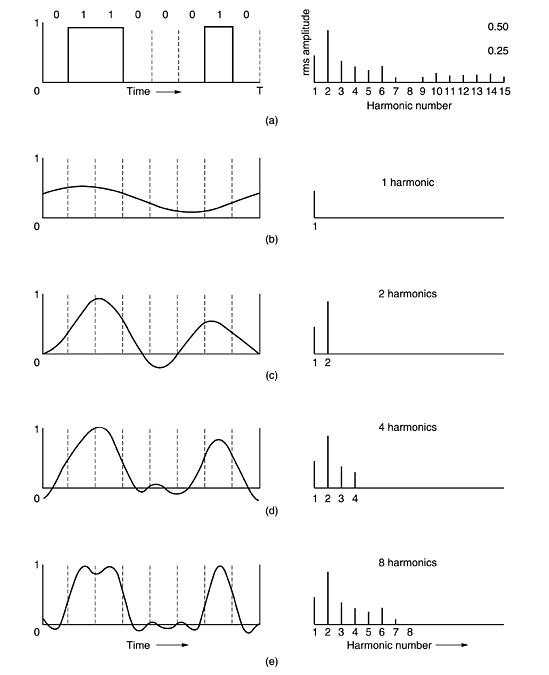

left-hand part of the figure below shows the voltage output by the transmitting

computer. The Fourier analysis of this signal yields the coefficients:

(a) A binary signal and its root-mean-square Fourier

amplitudes.

(b)-(e) Successive approximations to the original signal.

The

root-mean-square amplitudes,  , for the first few terms are shown on the right-hand side of the

figure (a) . These values are of interest because their squares are

proportional to the energy transmitted at the corresponding frequency.

, for the first few terms are shown on the right-hand side of the

figure (a) . These values are of interest because their squares are

proportional to the energy transmitted at the corresponding frequency.

Unfortunately, all

transmission facilities diminish different Fourier components by different

amounts, thus introducing distortion. Usually, the amplitudes are transmitted

undiminished from 0 up to some frequency fc [measured in cycles/sec or Hertz (Hz)] with all frequencies above

this cutoff frequency attenuated. The range of frequencies transmitted without

being strongly attenuated is called the bandwidth.

The bandwidth is a

physical property of the transmission medium and usually depends on the

construction, thickness, and length of the medium.

Now let us consider

how the signal of (a) would look if the bandwidth were so low that only the

lowest frequencies were transmitted [i.e., if the function were being

approximated by the first few terms of the first equation . Figure (b) shows

the signal that results from a channel that allows only the first harmonic (the

fundamental, f)

to pass through. Similarly, fig (c)-(e)

show the spectra and reconstructed functions for higher-bandwidth channels. Limiting the bandwidth limits the data

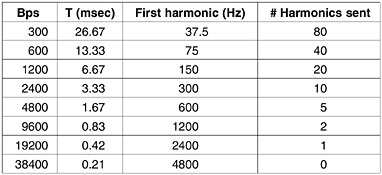

rate, even for perfect channels.

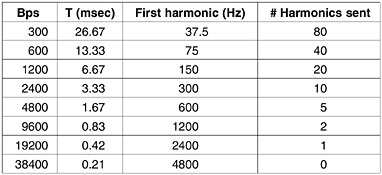

Relation between data rate and

harmonics

As early as 1924,

an AT&T engineer, Henry Nyquist, realized that even a perfect channel has a

finite transmission capacity. He derived an equation expressing the maximum

data rate for a finite bandwidth noiseless channel. In 1948, Claude Shannon

carried Nyquist's work further and extended it to the case of a channel subject

to random (that is, thermodynamic) noise (Shannon, 1948).

Nyquist proved that

if an arbitrary signal has been run through a low-pass filter of bandwidth H, the

filtered signal can be completely reconstructed by making only 2H

(exact) samples per second. Sampling the line faster than 2H times per second is pointless

because the higher frequency components that such sampling could recover have

already been filtered out. If the signal consists of V discrete levels, Nyquist's

theorem states:

For example, a

noiseless 3-kHz channel cannot transmit binary (i.e., two-level) signals at a

rate exceeding 6000 bps.

So far we have

considered only noiseless channels. If random noise is present, the situation

deteriorates rapidly. And there is always random (thermal) noise present due to

the motion of the molecules in the system. The amount of thermal noise present

is measured by the ratio of the signal power to the noise power, called the signal-to-noise

ratio. If we denote the signal power by S and the noise power by N, the

signal-to-noise ratio is S/N. Usually, the ratio itself is not quoted; instead, the

quantity 10  is given. These units are called

decibels

(dB). An S/N

ratio of 10 is 10 dB, a ratio of 100 is 20 dB, a ratio of 1000 is 30 dB, and so

on.

is given. These units are called

decibels

(dB). An S/N

ratio of 10 is 10 dB, a ratio of 100 is 20 dB, a ratio of 1000 is 30 dB, and so

on.

Shannon's major

result is that the maximum data rate of a noisy channel whose bandwidth is H Hz, and

whose signal-to-noise ratio is S/N, is given by:

2.2 . Principles of QoS

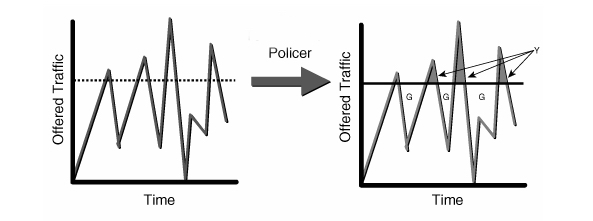

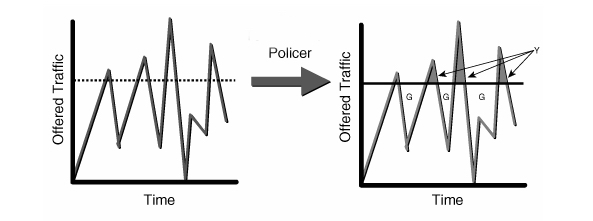

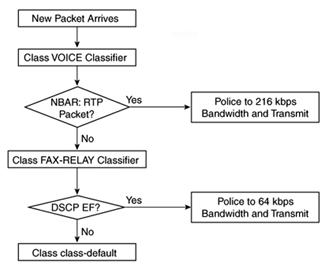

Policing

Policers measure

and limit traffic in a particular queue. It can drop or mark traffic

Policing, as an

element of traffic control, is simply a mechanism by which traffic can be

limited. Policing is most frequently used on the network border to ensure that

a peer is not consuming more than its allocated bandwidth. A policer will

accept traffic to a certain rate, and then perform an action on traffic

exceeding this rate. A rather harsh solution is to drop the traffic, although the traffic could be

reclassified instead of being dropped.

A policer is a

yes/no question about the rate at which traffic is entering a queue. If the

packet is about to enter a queue below a given rate, take one action (allow the

enqueuing). If the packet is about to enter a queue above a given rate, take

another action. Although the policer uses a token bucket mechanism internally,

it does not have the capability to delay a packet as a shaping mechanism does.

y - temporary bursts permited

only if there are unused tokens G

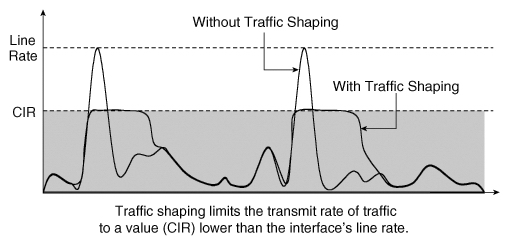

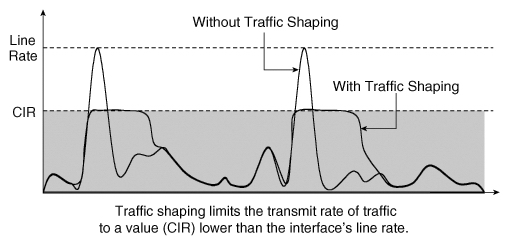

Shaping

Shapers delay

packets to meet a desired rate.

Shaping is the

mechanism by which packets are delayed before transmission in an output queue

to meet a desired output rate. This is one of the most common desires of users

seeking bandwidth control solutions. The act of delaying a packet as part of a

traffic control solution makes every shaping mechanism into a

non-work-conserving mechanism.

Viewed in reverse,

a non-work-conserving queuing mechanism is performing a shaping function. A

work-conserving queuing mechanism would not be capable of delaying a packet.

Shapers attempt to

limit or ration traffic to meet but not exceed a configured rate (frequently

measured in packets per second or bits/bytes per second). As a side effect,

shapers can smooth out bursty traffic. One of the advantages of shaping

bandwidth is the ability to control latency of packets. The underlying

mechanism for shaping to a rate is typically a token and bucket mechanism.

CIR

= Committed information rate, the policed rate

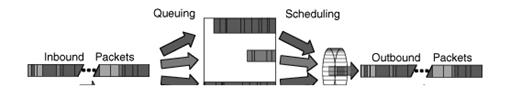

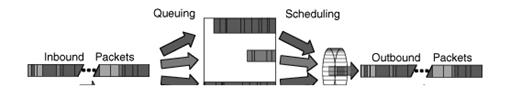

Scheduling

Schedulers arrange

and/or rearrange packets for output.

Scheduling is the mechanism by which packets

are arranged (or rearranged) between input and output of a particular queue. The

overwhelmingly most common scheduler is the FIFO (first-in first-out)

scheduler. From a larger perspective, any set of traffic control mechanisms on

an output queue can be regarded as a scheduler, because packets are arranged

for output.

Other generic scheduling

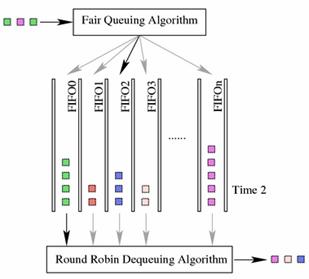

mechanisms attempt to compensate for various networking conditions. A fair

queuing algorithm (SFQ) attempts to prevent

any single client or flow from dominating the network usage. A round-robin

algorithm gives each flow or client a

turn to dequeue packets. Other sophisticated scheduling algorithms attempt to

prevent backbone overload (GRED) or refine other

scheduling mechanisms (ESFQ).

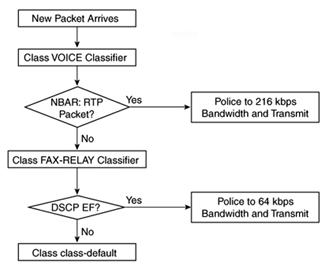

Classifying

Classifiers sort or

separate traffic into queues in other words the selection of traffic.

Classifying is the

mechanism by which packets are separated for different treatment, possibly

different output queues. During the process of accepting, routing and

transmitting a packet, a networking device can classify the packet a number of

different ways. Classification can include marking the packet, which usually happens on

the boundary of a network under a single administrative control or

classification can occur on each hop individually.

The Linux model allows for a packet to cascade

across a series of classifiers in a traffic control structure and to be

classified in conjunction with policers.

Dropping

Dropping discards

an entire packet, flow or classification.

Dropping a packet

is a mechanism by which a packet is discarded.

Marking

Marking is a

mechanism by which the packet is altered.

2.3 Kernel options and packages neeed

for traffic shaping

2.3.1 Kernel compilation options

If you've never setup traffic

shaping before, you'll need to enable traffic shaping, and check if your linux

has routing capabilites, too. Moreover, you'll need to enable netfilter support

for iptables if you haven't set that up yet, either.

The options listed below are taken from a

2.4.33.3 kernel source tree. If you

don't have the sources take your time and get them from www.kernel.org . I'm using a 2.4 kernel just

because it's rock-solid on Slackware/Debian and a lot of people will run 2.4

kernel for a long time to come and some of the changes to the system in a 2.6

kernel aren't necesarly to 2.4. The 2.6 kernel series shows a great deal of

improvment but it's still undergoing

heavy development and the stability of any given release can be

hit-or-miss.

Below are the options for setting your linux

box to forward packets (routing). The exact options may differ slightly from

kernel release to kernel release depending on patches and new schedulers and

classifiers.

The selections for

forwarding traffic on 2.6 series kernel are listed under Networking -> Networking

options

Networking options --->

<*>

Packet socket

[*] Packet socket: mmapped IO

<*> Netlink device

emulation

[*] Network packet filtering

(replaces ipchains)

[*] Socket Filtering

<*> Unix domain

sockets

[*] TCP/IP networking

[*] IP:

multicasting

[*] IP: advanced router

[*] IP: policy routing

[*] IP: use netfilter MARK value as routing

key

[*] IP: fast network address

translation

[*] IP: equal cost multipath

[*] IP: use TOS value as routing key

[*] IP: verbose route monitoring

[ ] IP: kernel level autoconfiguration

< > IP: tunneling

< > IP: GRE tunnels over IP

[ ] IP: multicast routing

[ ] IP: ARP daemon support (EXPERIMENTAL)

[ ] IP: TCP Explicit Congestion Notification

support

[*] IP: TCP syncookie support (disabled per

default)

Next step is to

enable the flow classifiers. You will need to enable th options selected below

to use Netfilter effectively to classify traffic flows and fou use for

firewalling, too

The selections for

Netfilter for a 2.6 series kernel are listed under Networking -> Networking

options -> Network packet filtering (replaces ipchains) -> IP: Netfilter

Configuration..

Networking options ---> IP:

Netfilter Configuration --->

<M>

Connection tracking (required for masq/NAT)

<M> Userspace queueing

via NETLINK

<M> IP tables support

(required for filtering/masq/NAT)

<M> limit match support

<M> IPP2P match support

<M> MAC address match support

<M> Packet type match support

<M> netfilter MARK match support

<M> Multiple port match support

<M> TOS match support

<M> recent match support

<M> ECN match support

<M> DSCP match support

<M> AH/ESP match support

<M> LENGTH match support

<M> TTL match support

<M> tcpmss match support

<M> stealth match support

<M> Unclean match support

<M> Owner match support

<M> Packet filtering

<M> REJECT target support

<M> MIRROR target support

<M> Packet mangling

<M> TOS target support

<M> ECN target support

<M> DSCP target support

<M> MARK target support

<M> LOG target support

<M> ULOG target support

<M> TCPMSS target support

<M> ARP tables

support

<M> ARP packet filtering

<M> ARP payload mangling

< > ipchains (2.2-style)

support

< > ipfwadm (2.0-style)

support

For classifing p2p

traffic I prefer to use the extension of iptables

called IPP2P. This is obtained by patching the actual surce code of the kernel

with the patch-o-matic-ng obtained

from https://ftp.netfilter.org/pub/patch-o-matic-ng/snapshot/ .

Now

it's time for enabling the traffic

control support . Many distributions provide kernels with modular or monolithic

support for traffic control (Quality of Service). Custom kernels may not

already provide support (modular or not).

The selections for

traffic control for a 2.6 series kernel are listed under Networking -> Networking

options -> QoS and/or fair queuing.

At a minimum you will want to enable the options selected below.

[*] QoS and/or fair

queueing

<M> CBQ packet scheduler

<M> HTB packet scheduler

<M> CSZ packet scheduler

<M> H-FSC packet scheduler

<M> The simplest PRIO pseudoscheduler

<M> RED queue

<M> SFQ queue

<M> TEQL queue

<M> TBF queue

<M> GRED queue

<M> Network emulator

<M> Diffserv field marker

<M> Ingress Qdisc

[*] QoS support

[*] Rate estimator

[*] Packet classifier API

<M> TC index classifier

<M> Routing table based classifier

<M> Firewall based classifier

<M> U32 classifier

<M> Special RSVP classifier

<M> Special RSVP classifier for IPv6

[*] Traffic policing (needed for

in/egress)

2.3.2 Netfilter iptables

Netfilter is a very important part of the Linux kernel in

terms of security, packet mangling, and manipulation. The front end for

netfilter is iptables, which 'tells' the kernel what the user wants

to do with the IP packets arriving into, passing through, or leaving the Linux

box.

The most used features of netfilter are packet

filtering and network address translation, but there are a lot of other things

that we can do with netfilter, such as packet mangling Layer 7 filtering. It can be obtained from ftp://ftp.netfilter.org/pub/iptables/

Optionally we need patch-o-matic-ng for adding the IPP2P extension. The goal of the IPP2P

is to identify peer-to-peer (P2P) data in IP traffic. Thereby IPP2P integrates

itself easily into existing Linux firewalls and it's functionality can be used

by adding appropriate filter rules. It can be obtained from https://www.ipp2p.org/

Netfilter has a few tables, each containing a default

set of rules, which are called chains. The default table loaded into the kernel

is the filter table, which contains three chains:

INPUT: Contains rules for packets destined to the Linux

machine itself.

FORWARD: Contains rules for packets that the Linux machine

routes to another IP address.

OUTPUT: Contains rules for packets generated by the Linux

machine.

The operations iptables can do

with chains are:

List the rules

in a chain (iptables -L CHAIN).

Change the policy

of a chain (iptables -P CHAIN ACCEPT).

Create a new

chain (iptables -N CHAIN).

Flush a chain;

delete all rules (iptables -F CHAIN).

Delete a chain

(iptables -D CHAIN), only if the

chain is empty.

Zero counters

in a chain (iptables -Z CHAIN). Every

rule in every chain keeps a counter of the number of packets and bytes it

matched. This command resets those counters.

For the -L, -F, -D, and -Z operations, if the chain name is

not specified, the operation is applied to the entire table, which if not

specified is by default the filter table. To specify the table on which we do

operations, we must use the -t switch like so iptables -t filter .

Operations that

iptables can execute on rules are:

Append rules to

a chain (iptables -A)

Insert rules in

a chain (iptables -I)

Replace a rule

from a chain (iptables -R)

Delete a rule

from a chain (iptables -D)

During run

time, you will prefer to use -I more

than -A because often you need to

insert temporary rules in the chain while iptables

-A places the rule at the end of the chain, while iptables -I places the rule on the top of the other rules in the

chain. However, you can insert a rule anywhere in the chain by specifying the

position where you want the rule to be in the chain with the -I switch:

iptables

-I CHAIN 4 will insert a rule at the fourth position of the specified

chain.

2.3.3 iproute2 tools (tc)

Iproute2 is

a suite of command line utilities which manipulate kernel structures for IP

networking configuration on a machine. Of the tools in the iproute2 package,

the binary tc is the only one used for traffic control . Because it interacts

with the kernel to direct the creation, deletion and modification of traffic

control structures, the tc binary needs to be compiled with support for all of

the qdiscs you wish to use. In particular, the HTB qdisc is not supported yet

in the upstream iproute2 package .

It can be obtained

from https://developer.osdl.org/dev/iproute2/

The ip

tool provides most of the networking configuration a Linux box needs. You can

configure interfaces, ARP, policy routing, tunnels, etc.

Now, with IPv4

and IPv6, ip can do pretty much anything (including a lot that we don't need in

our particular situations). The syntax of ip is not difficult, and there is a

lot of documentation on this subject. However, the most important thing is

knowing what we need and when we need it.

Let's have a look at the ip

command help to see what ip knows:

# ip help

Usage: ip [ OPTIONS ]

OBJECT

ip [ -force ] [-batch filename

where OBJECT :=

OPTIONS := |

-o[neline] | -t[imestamp] }

The ip link command shows the network

device's configurations that can be changed with ip link set. This command is used to modify the device's

proprieties and not the IP address.

The IP

addresses can be configured using the ip

addr command. This command can be used to add a primary or secondary

(alias) IP address to a network device (ip

addr add), to display the IP addresses for each network device (ip addr show), or to delete IP addresses

from interfaces (ip addr del). IP

addresses can also be flushed using different criteria, e.g. ip addr flush dynamic will flush all

routes added to the kernel by a dynamic routing protocol.

Neighbor/Arp

table management is done using ip

neighbor, which has a few commands expressively named add, change, replace, delete, and flush.

ip tunnel is used to manage tunneled connections. Tunnels can be gre, ipip, and sit.

The ip tool offers a way for monitoring

routes, addresses, and the states of devices in real-time. This can be

accomplished using ip monitor, rtmon, and rtacct commands included in the iproute2 package.

One very

important and probably the most used object of the ip tool is ip route, which can do any operations on

the kernel routing table. It has commands to add, change, replace, delete,

show, flush, and get routes.

One of the

things iproute2 introduced to Linux that ensured its popularity was policy

routing. This can be done using ip rule

and ip route in a few simple steps.

The tc command allows administrators to build different QoS

policies in their networks using Linux instead of very expensive dedicated QoS

machines. Using Linux, you can implement QoS in all the ways any dedicated QoS

machine can and even more. Also, one can make a bridge using a good PC running

Linux that can be transformed into a very powerful and very cheap dedicated QoS

machine.

2.4 Queueing disciplines for

bandwidth management

To prevent confusion, tc uses the following

rules for bandwith specification:

mbps = 1024 kbps = 1024 * 1024 bps => byte/s

mbit = 1024 kbit => kilo bit/s.

mb = 1024 kb = 1024 * 1024 b => byte

mbit = 1024 kbit => kilo bit.

but when it prints out the rates it will use:

1Mbit = 1024 Kbit = 1024 * 1024 bps => byte/s

With queueing we

determine the way in which data is SENT. It is important to realise that we can

only shape data that we transmit.

With the way the

Internet works, we have no direct control of what people send us. However, the Internet

is mostly based on TCP/IP which has a few features that help us. TCP/IP has no

way of knowing the capacity of the network between two hosts, so it just starts

sending data faster and faster ('slow start') and when packets start getting

lost, because there is no room to send them, it will slow down. In fact it is a

bit smarter than this, but more about that later.

If you have a

router and wish to prevent certain hosts within your network from downloading

too fast, you need to do your shaping on the *inner* interface of your router,

the one that sends data to your own computers.

You also have to be

sure you are controlling the bottleneck of the link. If you have a 100Mbit NIC

and you have a router that has a 256kbit link, you have to make sure you are

not sending more data than your router can handle. Otherwise, it will be the

router who is controlling the link and shaping the available bandwith. We need

to 'own the queue' so to speak, and be the slowest link in the chain. Luckily

this is easily possible.

As said, with

queueing disciplines, we change the way data is sent. Classless queueing disciplines are those that, by and large accept data

and only reschedule, delay or drop it.

These can be used to shape traffic for an

entire interface, without any subdivisions. It is vital that you understand

this part of queueing before we go on the classful qdisc-containing-qdiscs!

Each of these

queues has specific strengths and weaknesses and can be used as the primary

qdisc on an interface or can be used inside a leaf class of a classfull qdiscs.

Not all of them may be as well tested.

The Linux traffic

shaping implementation allows you to build arbitrarily complicated

configurations, based upon the building block of the qdisc. You can choose from

two kinds of qdiscs: classless and classful. By default, all Ethernet

interfaces get a classless qdisc for free, which is essentially a FIFO. You

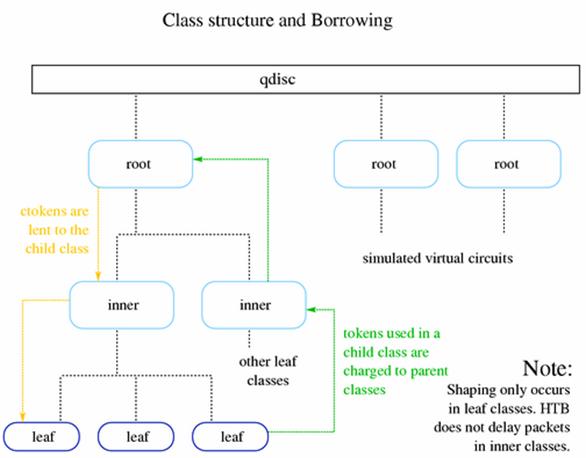

will likely replace this with something more interesting. Classful qdiscs, on

the other hand, can contain classes to arbitrary levels of depth. Leaf classes,

those which are not parents of additional classes, hold a default FIFO style

qdisc. Classless qdiscs are schedulers. Classful qdiscs are generally shapers,

but some schedule as well.

Each network

interface effectively has two places where you can attach qdiscs: root and

ingress. root is essentially a synonym for egress. The distinction between

these two hooks into Linux QoS is essential. The root hook sits on the inside

of your Ethernet interface. You can effectively apply traffic classification

and scheduling against the root hook as any queue you create is under your

control. The ingress hook rests on the outside of your Ethernet interface. You

cannot shape this traffic, but merely throttle it, because it has already

arrived.

2.4.1

Classless Queuing Disciplines (qdiscs)

Lets consider the

example below:

tc qdisc add dev eth2 parent

root handle 1:0 pfifo

First, we specify

that we want to work with a qdisc.

Next, we indicate we wish to add a

new qdisc to the Ethernet device eth2.

(You can specify del in place of add to remove the qdisc in question.)

Then, we specify the special parent root.

It is the hook on the egress side of your Ethernet interface. The handle is the magic userspace way of

naming a particular qdisc, but more on that later. Finally, we specify the

qdisc we wish to add. Because pfifo

is a classless qdisc, there is nothing more to do.

Qdiscs are always

referred to using a combination of a major node number and a minor node number.

For any given qdisc, the major node number has to be unique for the root hook

for a given Ethernet interface. The minor number for any given qdisc will

always be zero. By convention, the first qdisc created is named 1:0. You could,

however, choose 7:0 instead. These numbers are actually in hexadecimal, so any

values in within the range of 1 to ffff are also valid. For readability the

digits 0 to 9 are generally used exclusively.

2.4.1.1 FIFO, First-In First-Out

(pfifo and bfifo)

The FIFO algorithm forms the basis

for the default qdisc on all Linux network interfaces (pfifo_fast). It performs

no shaping or rearranging of packets. It simply transmits packets as soon as it

can after receiving and queuing them. This is also the qdisc used inside all

newly created classes until another qdisc or a class replaces the FIFO.

A FIFO qdisc must, however, have a size limit (a buffer size) to prevent

it from overflowing in case it is unable to dequeue packets as quickly as it

receives them. Linux implements two basic FIFO qdiscs, one based on bytes, and

one on packets. Regardless of the type of FIFO used, the size of the queue is

defined by the parameter limit. For a pfifo the unit is understood to be

packets and for a bfifo the unit is understood to be bytes.

tc

qdisc add dev eth0 handle 1:0 root dsmark indices 1 default_index 0

tc

qdisc add dev eth0 handle 2:0 parent 1:0 pfifo limit 30

2.4.1.2 pfifo_fast,

the default Linux qdisc

The pfifo_fast qdisc is the default qdisc for

all interfaces under Linux. Based on a conventional FIFO qdisc, this qdisc also

provides some prioritization. It provides three different bands (individual

FIFOs) for separating traffic. The highest priority traffic (interactive flows)

are placed into band 0 and are always serviced first. Similarly, band 1 is

always emptied of pending packets before band 2 is dequeued.

There is nothing configurable to the

end user about the pfifo_fast qdisc

2.4.1.3 SFQ,

Stochastic Fair Queuing

The SFQ qdisc attempts to fairly distribute opportunity to transmit data

to the network among an arbitrary number of flows. It accomplishes this by

using a hash function to separate the traffic into separate (internally

maintained) FIFOs which are dequeued in a round-robin fashion. Because there is

the possibility for unfairness to manifest in the choice of hash function, this

function is altered periodically. Perturbation (the parameter perturb) sets

this periodicity.

tc qdisc add dev eth0 handle 1:0

root dsmark indices 1 default_index 0

tc qdisc add dev eth0 handle 2:0

parent 1:0 sfq perturb 10

2.4.1.4 Other

classless qdiscs (ESFQ,GRED,TBF)

ESFQ, Extended Stochastic Fair Queuing

Conceptually, this qdisc is no different than

SFQ although it allows the user to control more parameters than its simpler

cousin. This qdisc was conceived to overcome the shortcoming of SFQ identified

above. By allowing the user to control which hashing algorithm is used for

distributing access to network bandwidth, it is possible for the user to reach

a fairer real distribution of bandwidth.

tc qdisc add dev eth0 root esfq

perturb 10

GRED, Generic Random Early Drop

Theory declares that a RED algorithm is useful on a backbone or core

network with data rates over 100 Mbps, but not as useful near the end-user

TBF, Token Bucket Filter

This qdisc is built on tokens and buckets. It simply shapes traffic

transmitted on an interface. To limit the speed at which packets will be

dequeued from a particular interface, the TBF qdisc is the perfect solution. It

simply slows down transmitted traffic to the specified rate. Packets are only transmitted if there are

sufficient tokens available. Otherwise, packets are deferred. Delaying packets

in this fashion will introduce an artificial latency into the packet's round

trip time.

tc qdisc add dev eth0 handle 1:0

root dsmark indices 1 default_index 0

tc qdisc add dev eth0 handle 2:0

parent 1:0 tbf burst 20480 limit 20480 mtu 1514 rate 32000bps

2.4.2 Classfull qdisc

Now, let us look at

an example where we add a classful qdisc and a single class.

# tc qdisc add dev eth2 parent

root handle 1:0 htb default 1

# tc class add dev eth2

parent 1:0 classid 1:1 htb rate 1mbit

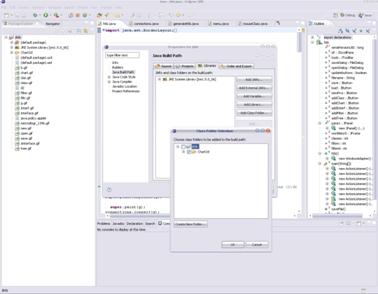

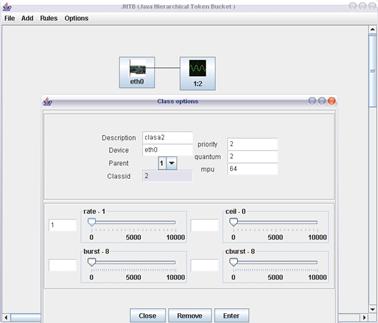

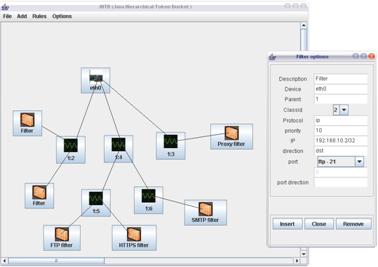

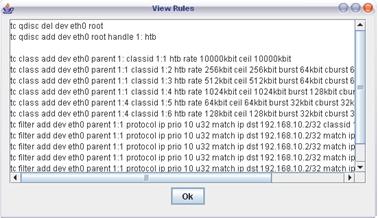

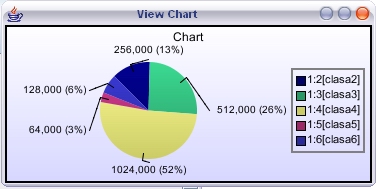

The first line